Throughout the last few years, I've been fortunate to do lots of incredible things at Virginia Tech. But, I'll be honest when I say I'm probably most proud of what I'll be talking about in this post. Humbly, it's pretty slick!

Background and Goals

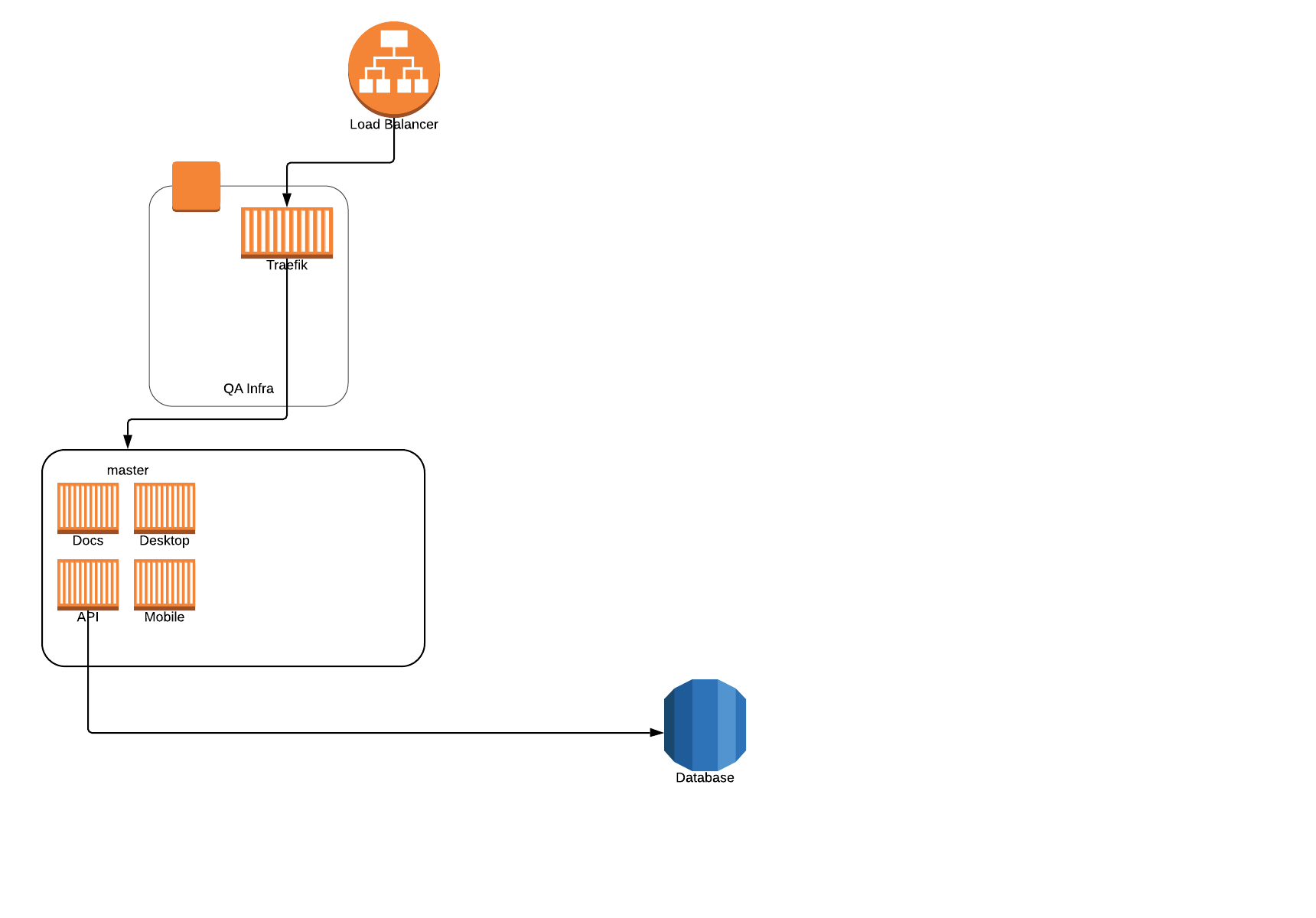

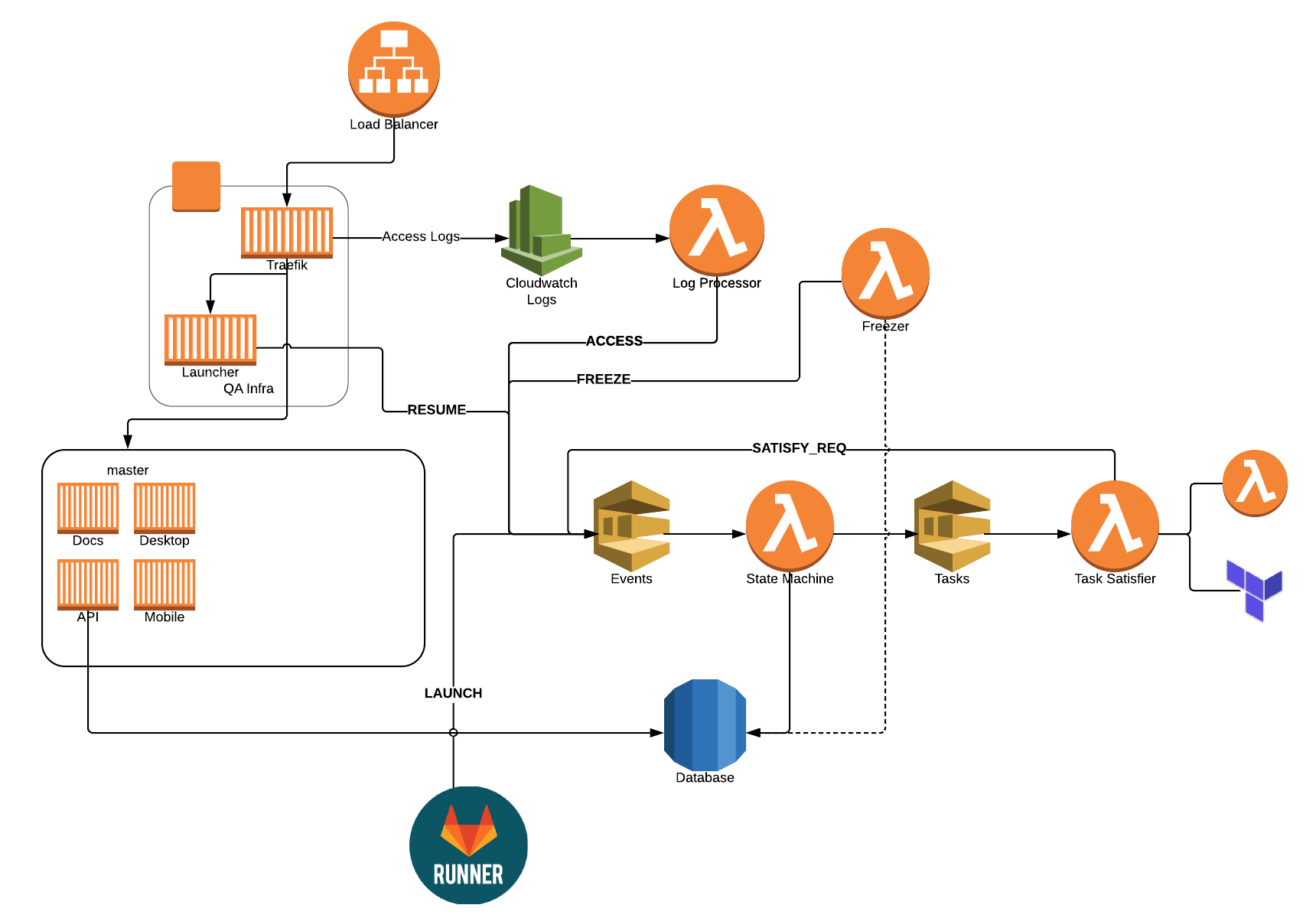

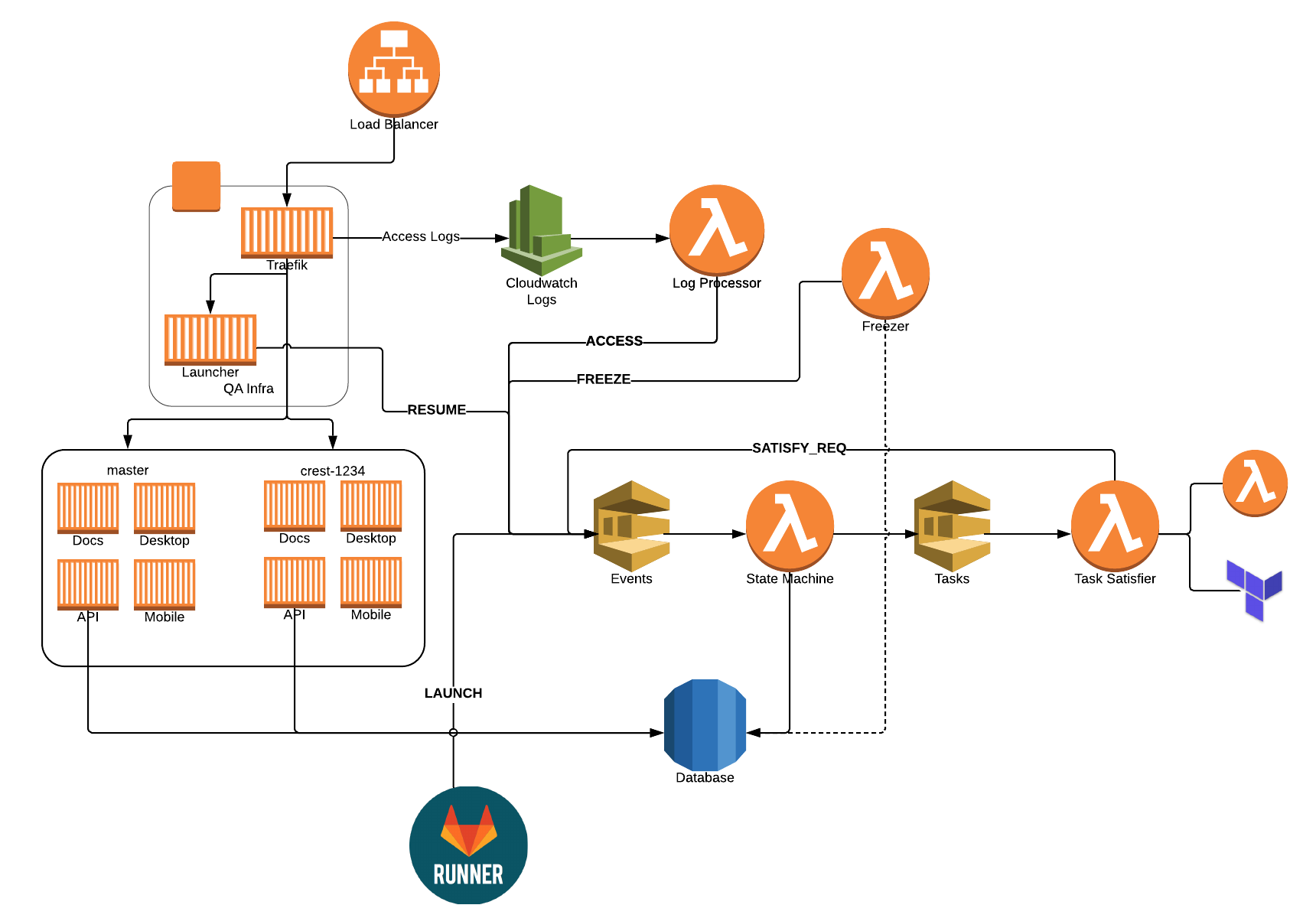

I've written about our QA setup before, but we've continued to evolve it over the years. About six months ago, we started to run into scaling problems, as we were running everything locally on a big single-node Swarm cluster. It worked great, but as we scaled up our team, we needed more and more concurrent stacks. We could have added nodes to our cluster, but we also wanted to move to the cloud.

Rather than lifting and shifting, we really wanted to figure out how to do it cloud-first. How can we leverage various AWS services? What new things can we learn? How do we manage our "infrastructure as code?" In addition, we had the following goals:

- Allow for almost limitless scale - we have no idea how many concurrent features we may be working on, but want to be able to scale

- Minimize cost as much as possible - how can we prevent paying for stacks that are sitting idle and not being used most of the time?

- Be quickly available - if we scale out a stack, how do we get it back as quickly as possible to minimize downtime for our QA testers?

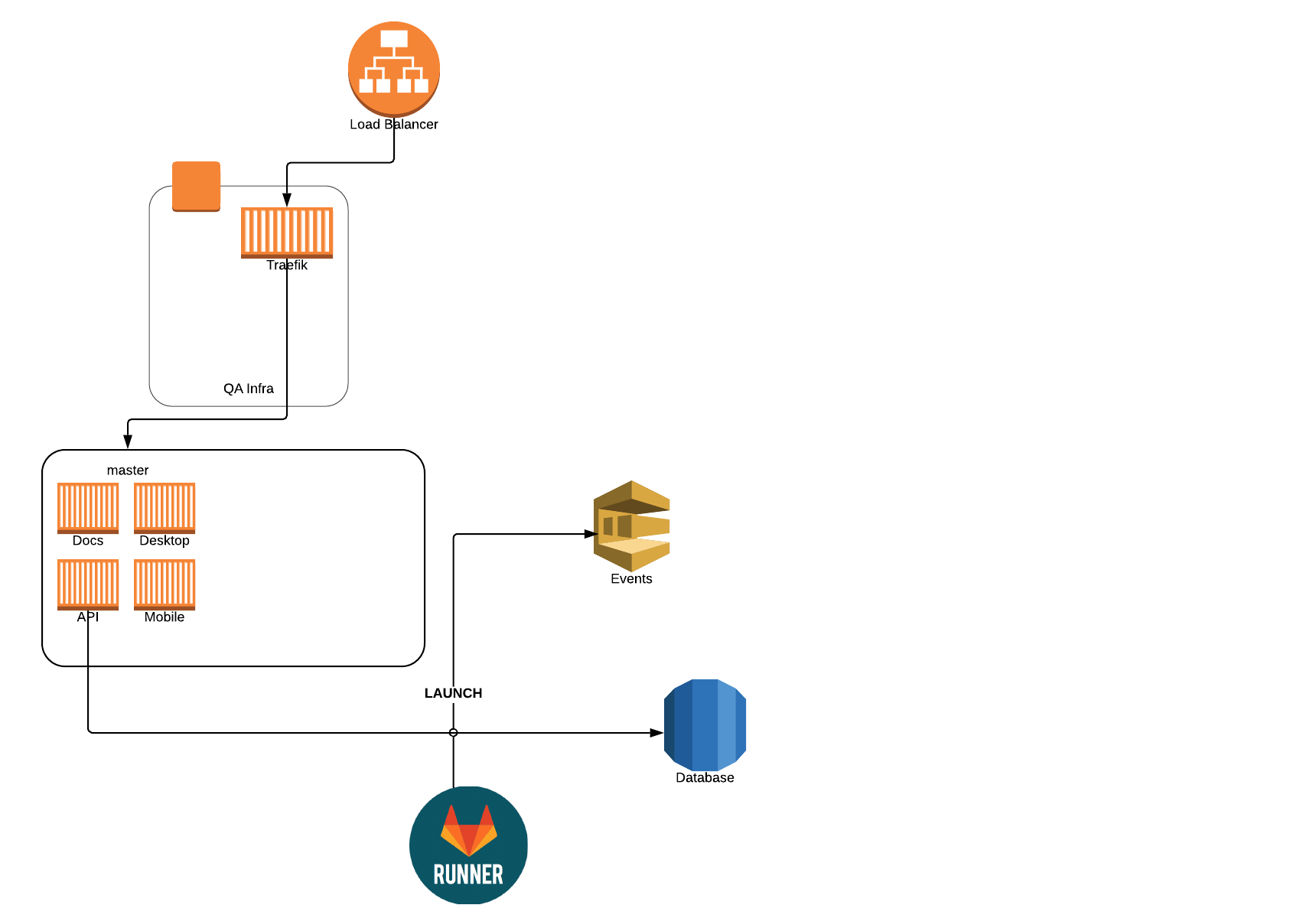

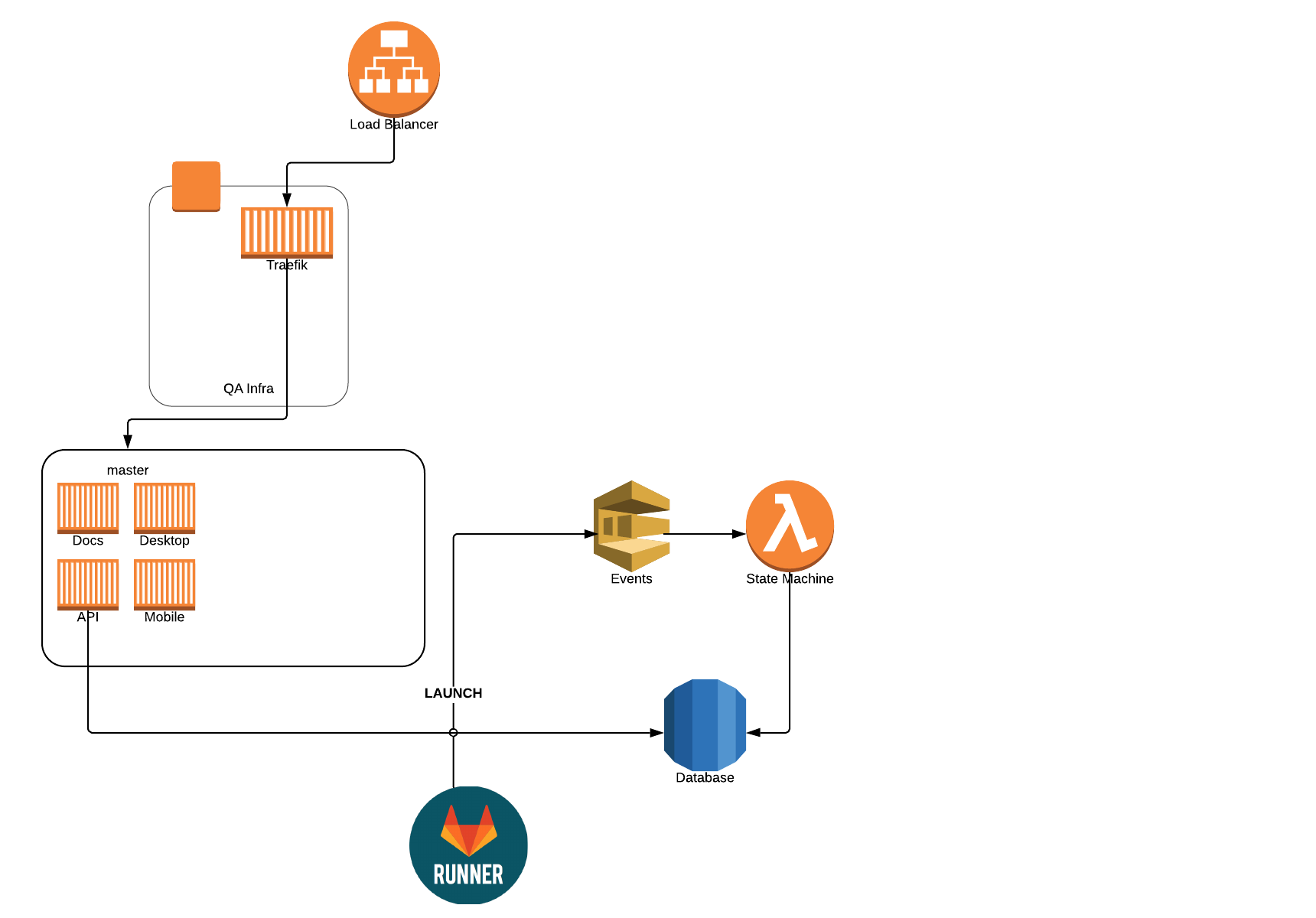

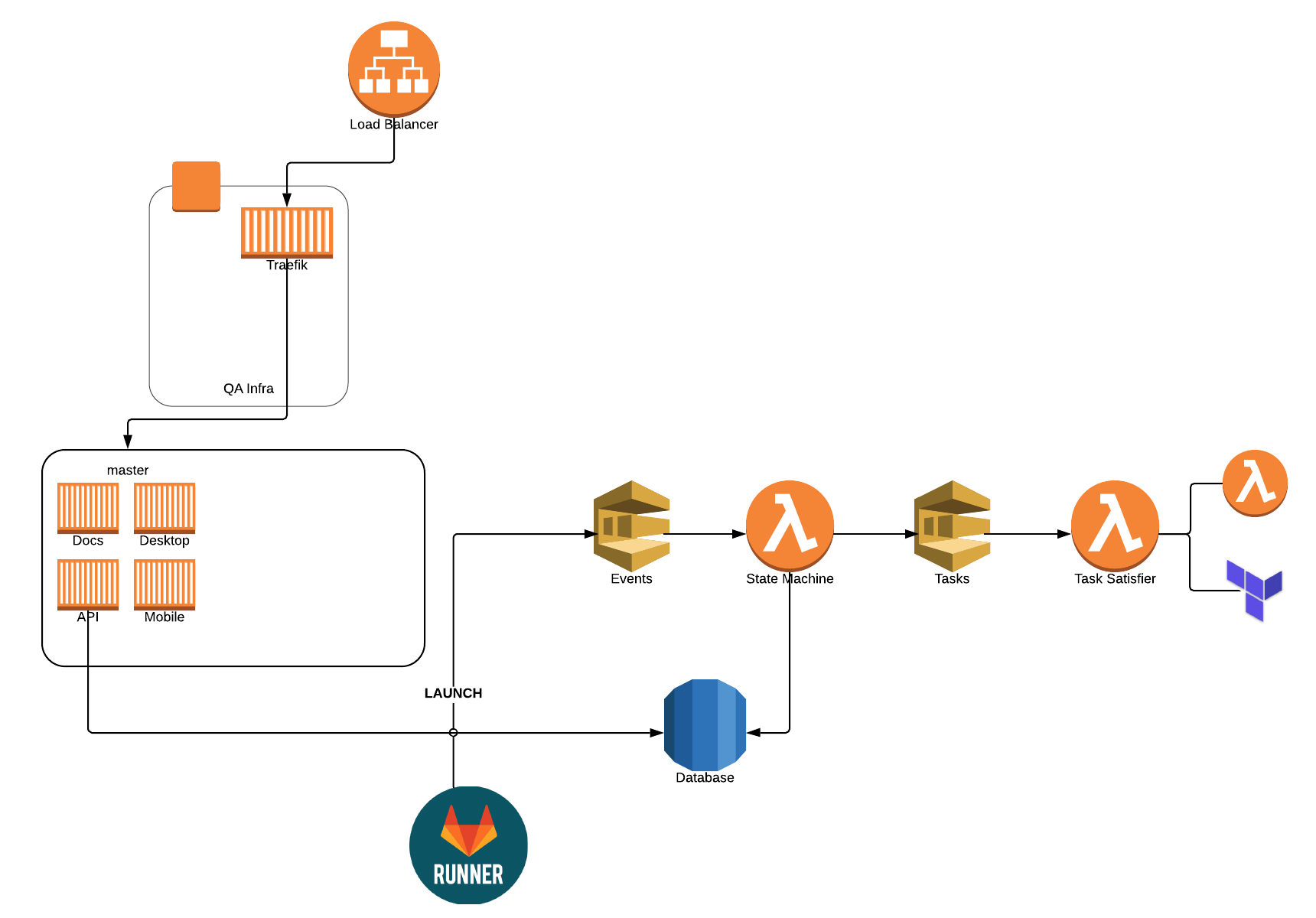

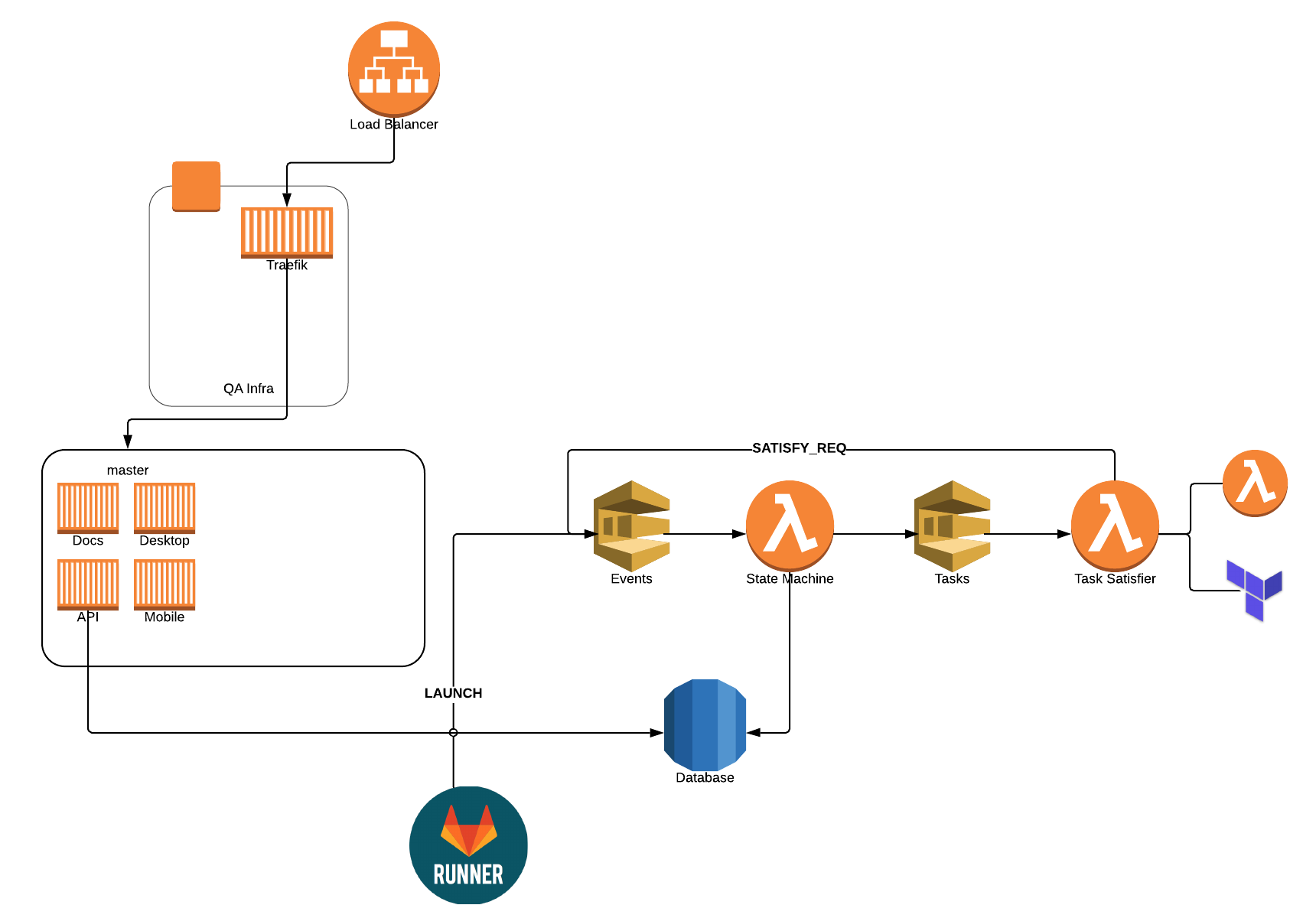

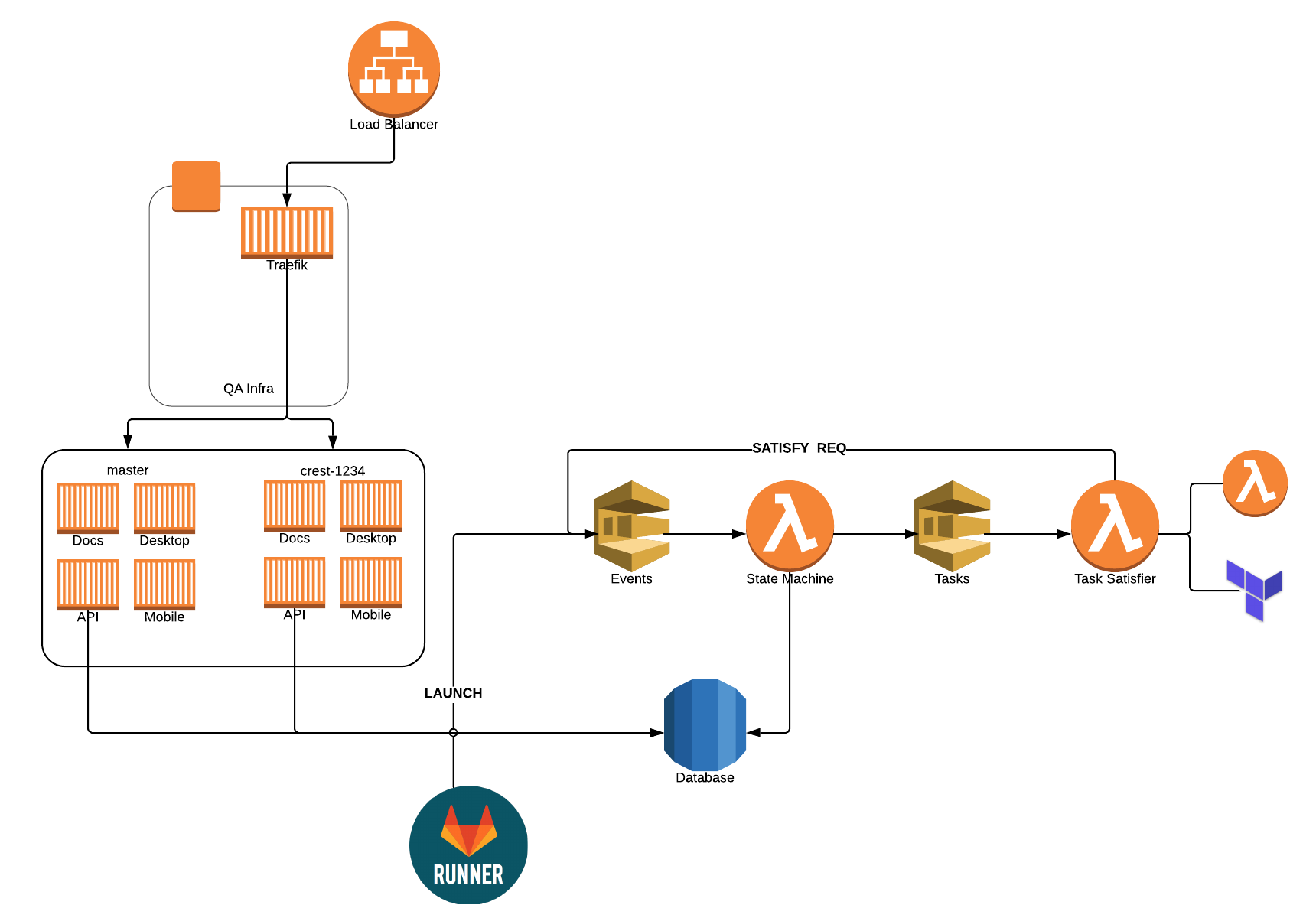

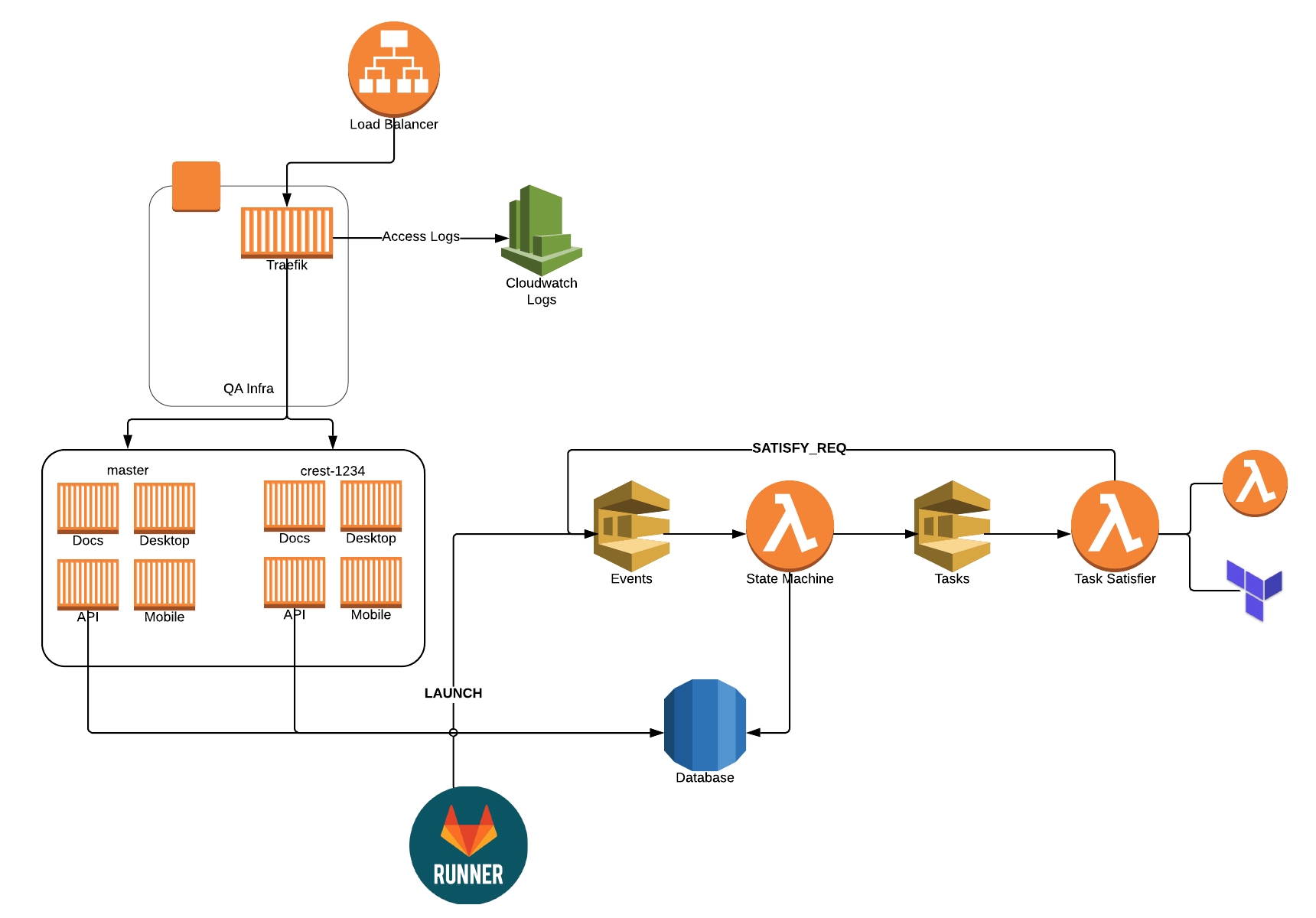

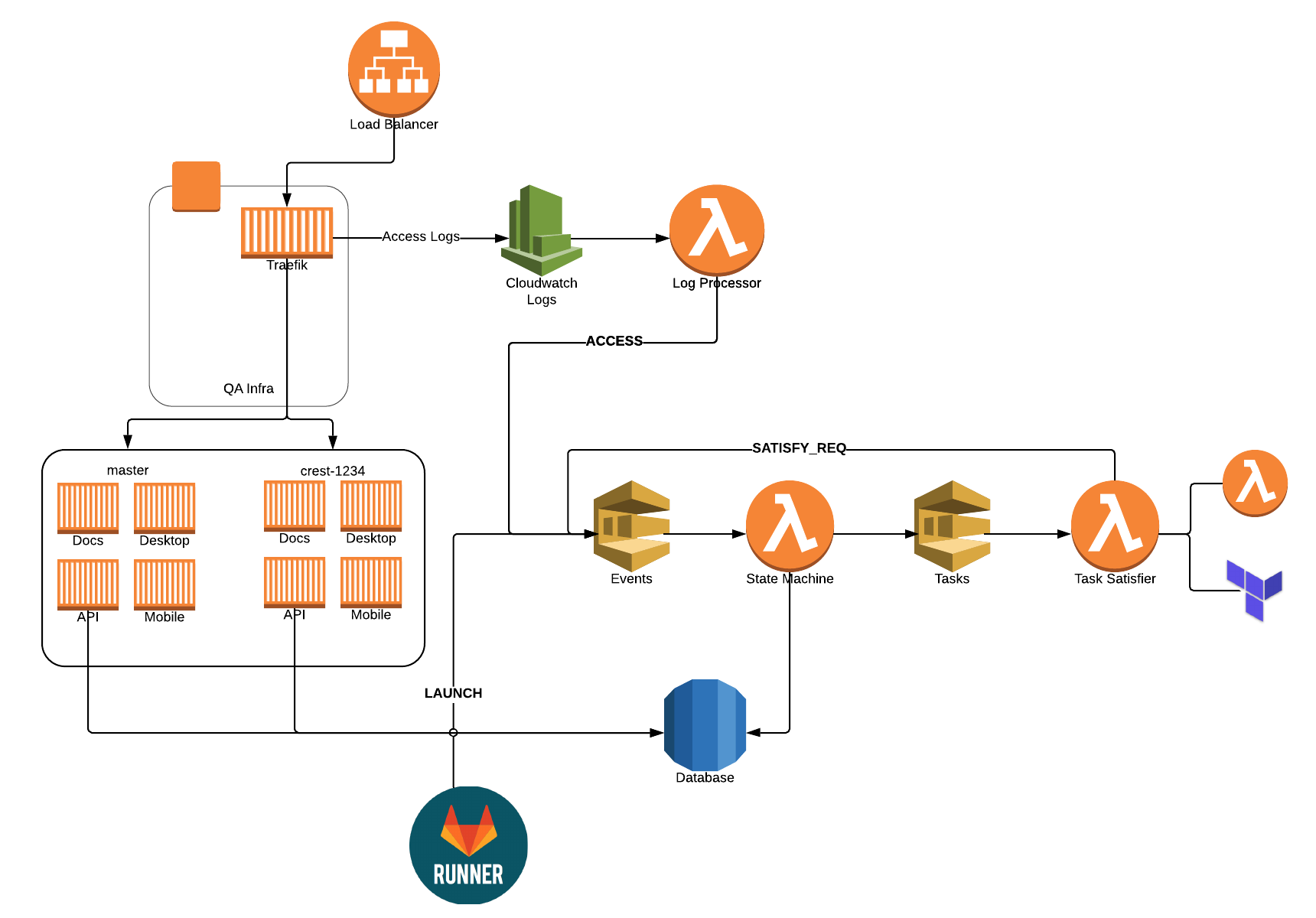

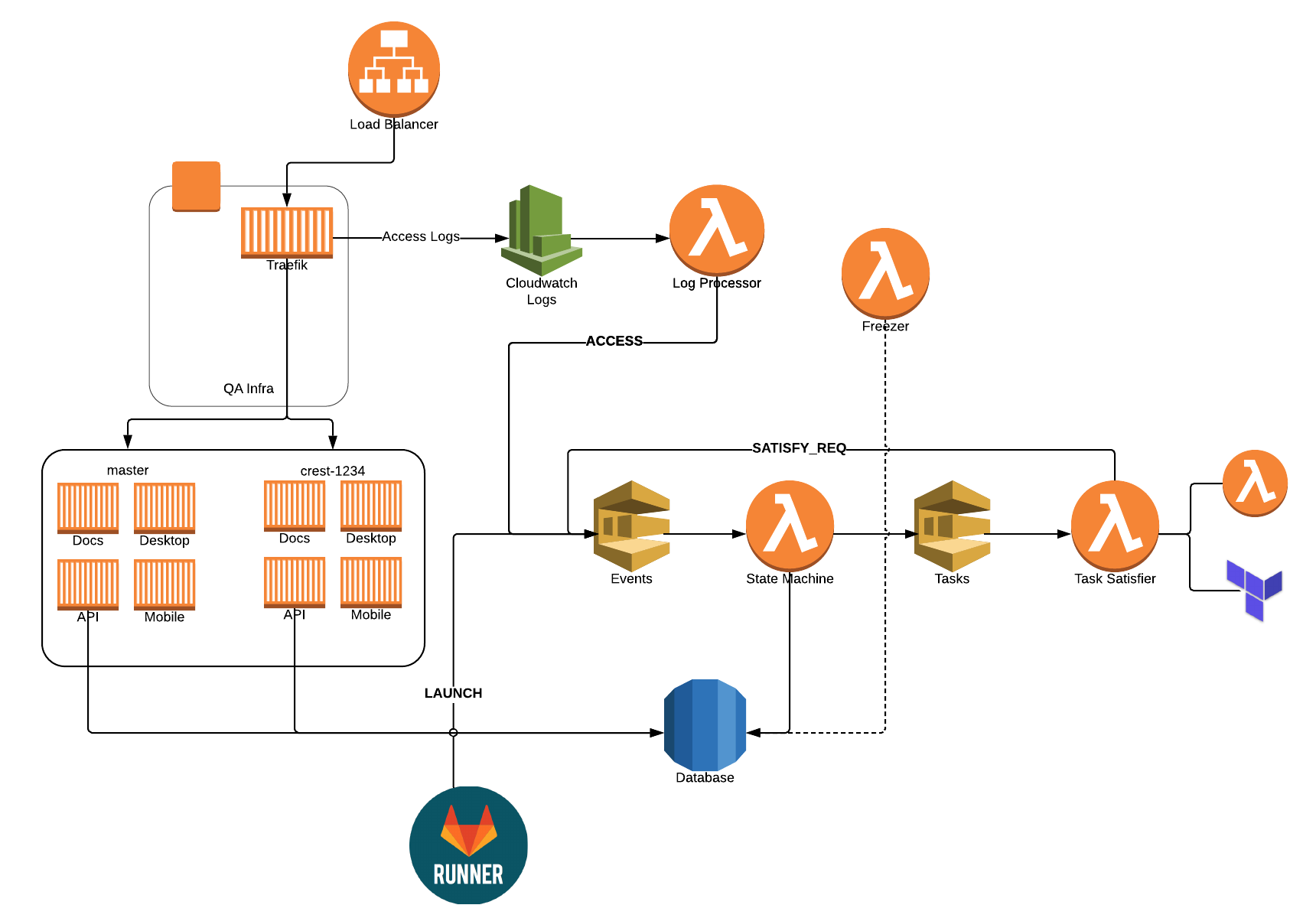

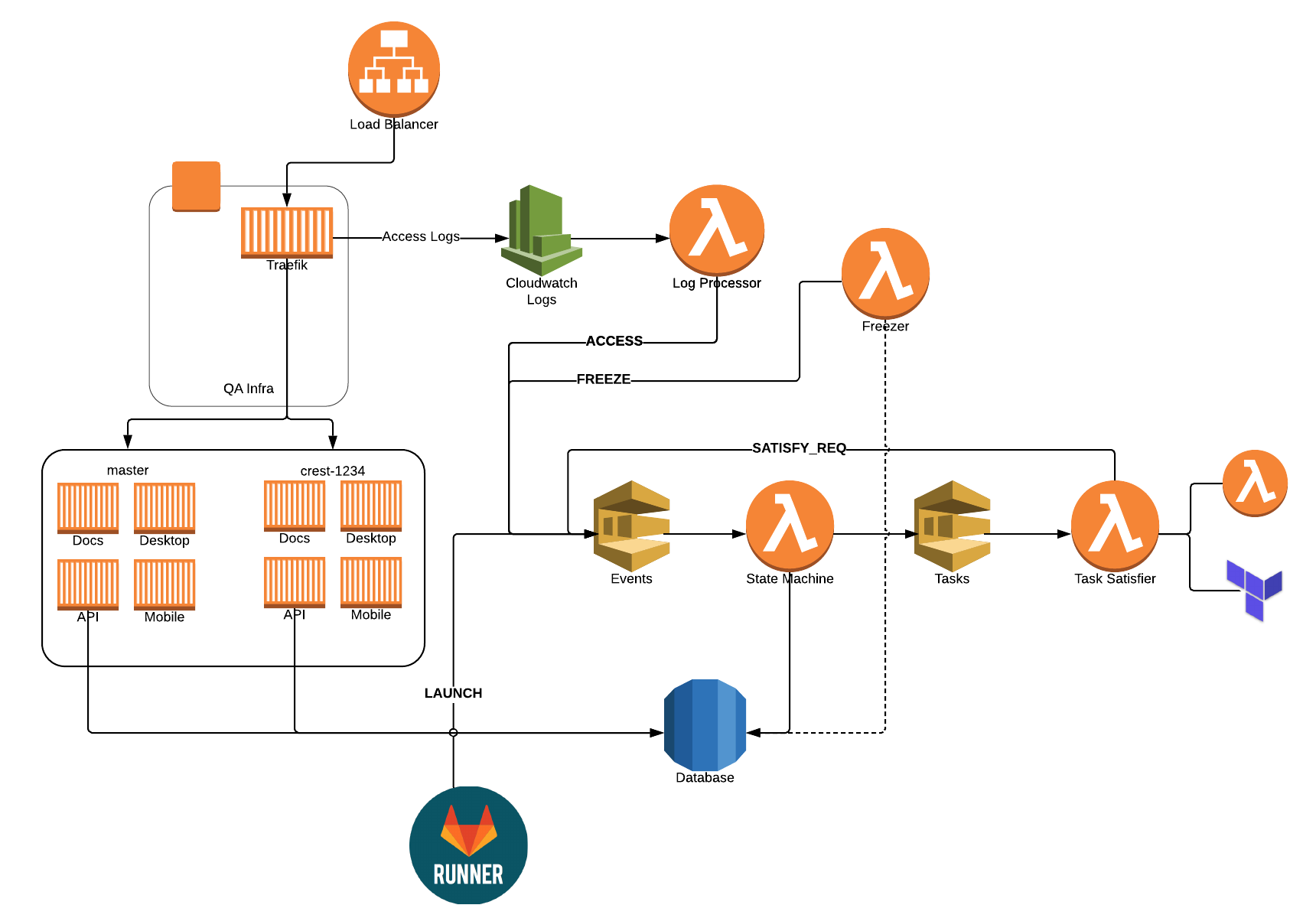

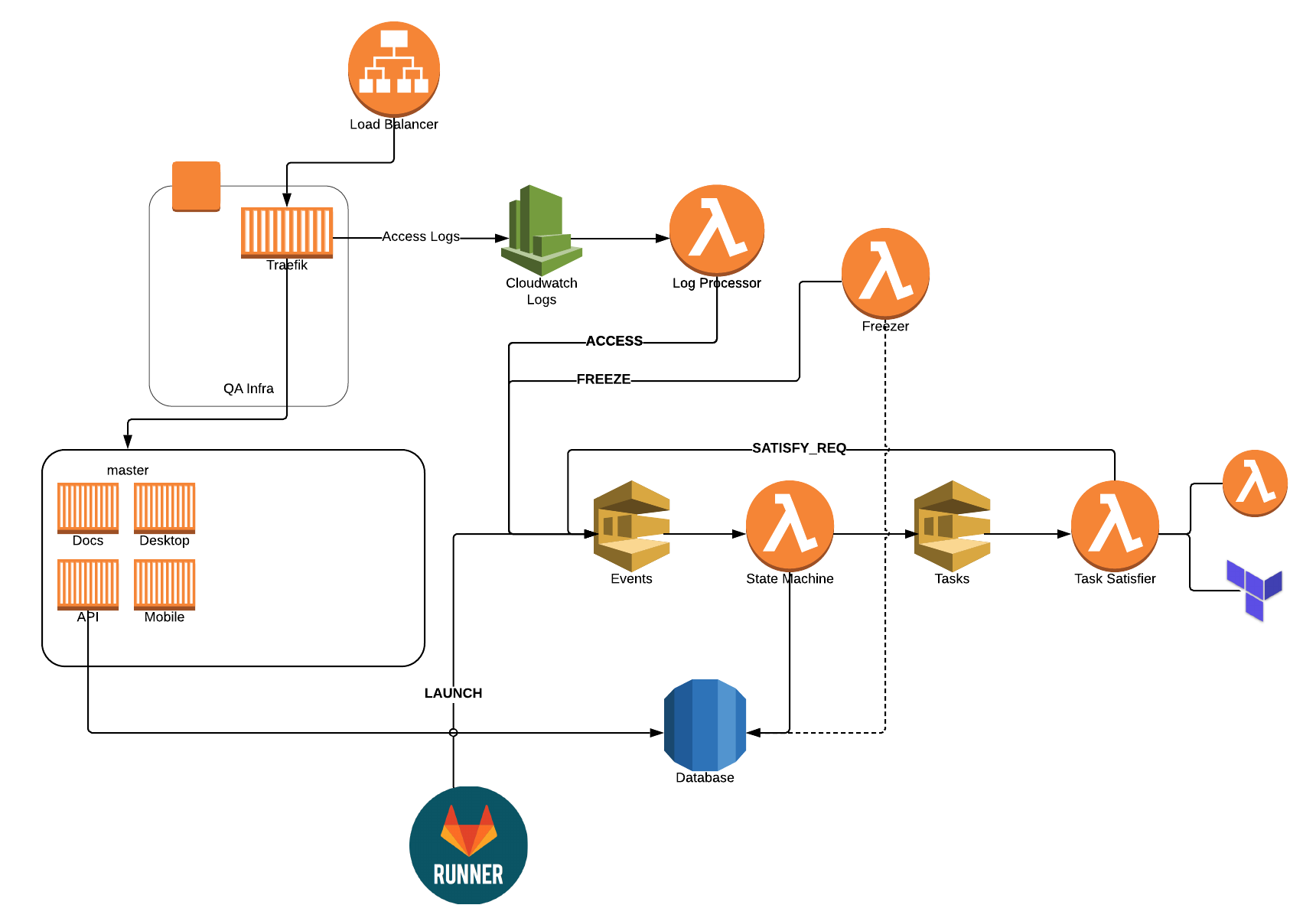

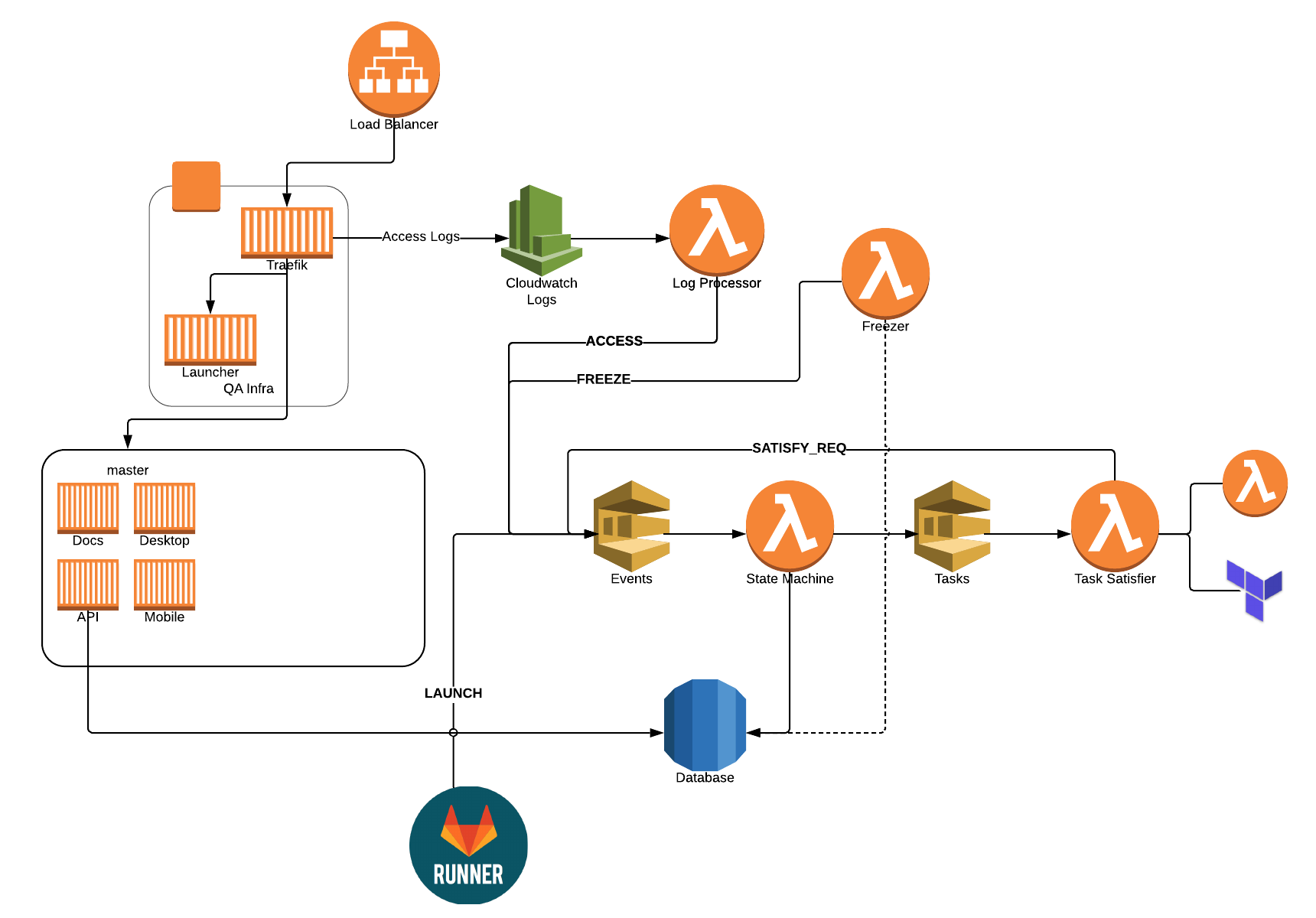

As I presented what we've done to others, I realized the best way to convey the environment was through a story. So, enjoy the slider/carousel below!

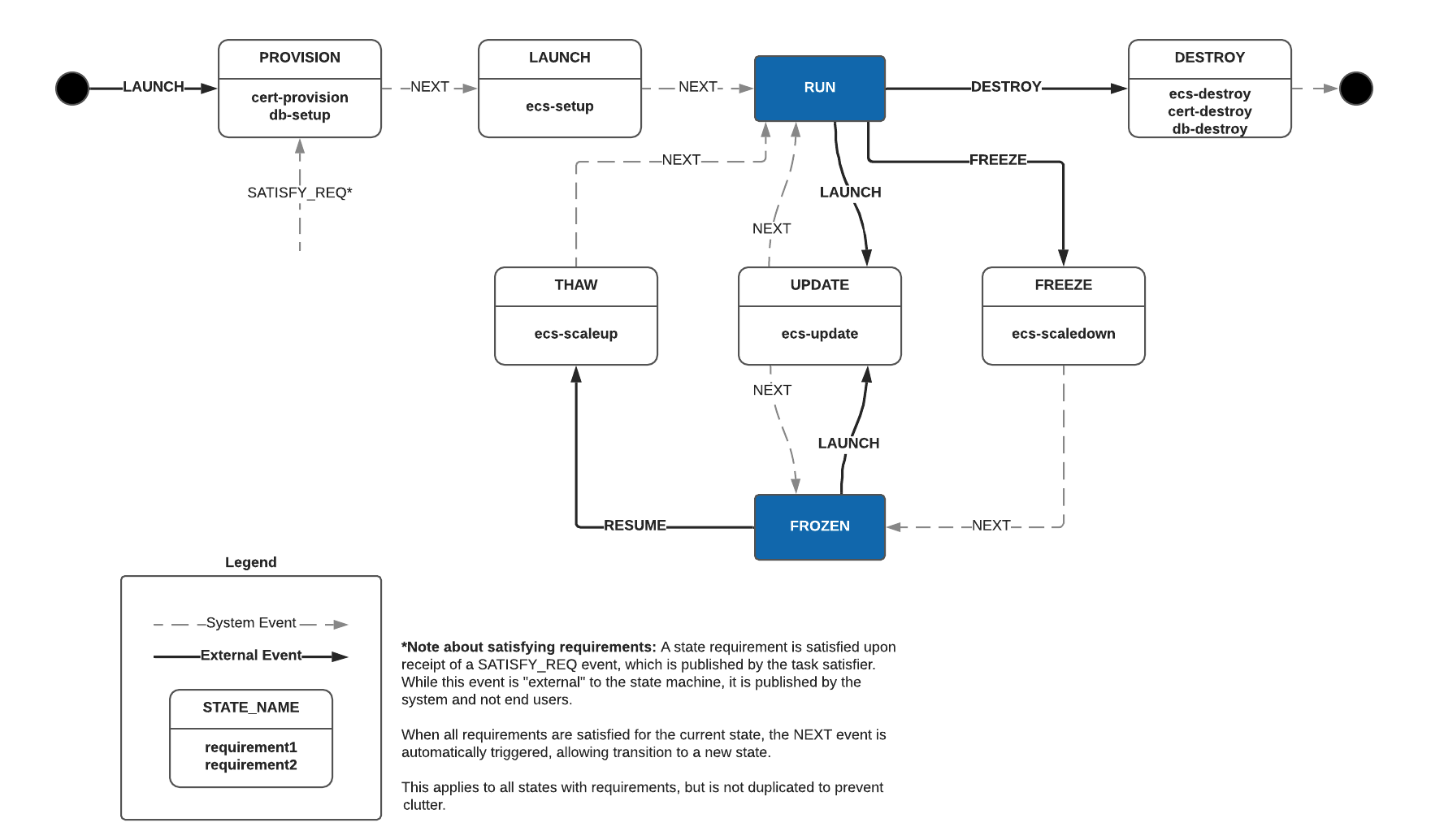

The State Machine

In case you're wondering what the full state machine looks like, here you go! In each state's box, it contains the tasks that are needed in order to advance to the next state. Click on it for a larger version.

Why Fargate?

When we first deployed the new environment back in December, we were actually spinning up an EC2 machine for each and every stack. It worked quite well, but in January, Fargate dropped their prices quite significantly. That made it a no-brainer to switch to Fargate. While no longer needing to manage infrastructure, it has significantly reduced the time to initially launch and resume a stack.

A few takeaways

We learned quite a few things, as this was our first endeavour leveraging this many AWS services and being this elastic and responsive. Here are a few items, in no particular order…

- None of this would have been possible without containers. If you're not using them yet, start now. The stacks are in containers. Traefik and the launcher are containers. It's containers everywhere. That's how you should do cloud.

- We're now big fans of Terraform. We used it setup the base infrastructure and to run several of our tasks.

- Fargate is awesome for on-demand environments that come and go. It's fast. It's quick. There's no infrastructure to worry about! Use it.

- Using SQS (and an event-driven design) was a great choice! If I ever needed to debug something, I could always open the SQS console, send a message manually, see what's in the queue, flush a queue. Make sure you setup a dead-letter queue to know when things fail too!

- Traefik is awesome… duh! We didn't have to think twice and haven't had a single issue with it the entire time.

- We set the concurrency for our state machine function to only 1, which makes a lot of the concurrency issues disappear. Responding to those events are fast, since most of the time is spent actually executing the tasks. For task completion, we let that scale as needed.

- Don't pre-optimize everything. When we thought about running Terraform scripts in Lambda, we were worried about how long it would take. They would easily fit into the time limits, but we were worried about the costs. After running it for a month or two, our Lambda bill hasn't crossed $0.25 for a single month yet. Good thing we didn't waste a lot of time over-optimizing it!

What's it cost? Was it worth it?

Overall, our QA environment has run at just over $100/month. Not bad for a team of six developers who range between 10-15 feature branches concurrently.

We didn't have a cost for what it took to run our QA environment on-prem, but we were frequently spending half a day trying to get the machine back under control due to over-utilization. Seeing that we've yet to spend any significant time in three months of operation in AWS, it's already been worth the effort in time saved.

What next?

We learned even more about how to manage state machines in the cloud using Lambda functions. But, that deserves a blog post of its own. So, be on the lookout for that!